In my experience, the supermajority of engagement on viral AI Facebook pages is just as artificially-generated as the content they publish.

Whether it's a child transforming into a water bottle cyborg, a three-armed flight attendant rescuing Tiger Jesus from a muddy plane crash, or a hybrid human-monkey baby being stung to death by giant hornets, all tend to have copy+pasted captions, reactions & comments which usually make no sense in the observed context.

I've noticed similar patterns on YouTube. Sometimes the comments include timestamp links, which makes them seem more credible, but upon further inspection, it's all bot activity.

https://orgmode.org/manual/Capture-templates.html

Testing org-capture template generated response file

https://maggieappleton.com/home-cooked-software

The emerging golden age of home-cooked software, barefoot developers, and why the local-first community should help build it

This is a talk I presented at the Local-first Conference in Berlin, May 2024. It's specifically directed at the local-first community, but it's relevant to anyone involved in building software.

For the last ~year I've been keeping a close eye on how language models' capabilities meaningfully change the speed, ease, and accessibility of software development. The slightly bold theory I put forward in this talk is that we're on the verge of a golden age of local, home-cooked software and a new kind of developer – what I've called the barefoot developer.

https://huggingface.co/collections/facebook/llm-compiler-667c5b05557fe99a9edd25cb

Large Language Models (LLMs) have demonstrated remarkable capabilities across a variety of software engineering and coding tasks. However, their application in the domain of code and compiler optimization remains underexplored. Training LLMs is resource-intensive, requiring substantial GPU hours and extensive data collection, which can be prohibitive. To address this gap, we introduce Meta Large Language Model Compiler (LLM Compiler), a suite of robust, openly available, pre-trained models specifically designed for code optimization tasks. Built on the foundation of Code Llama, LLM Compiler enhances the understanding of compiler intermediate representations (IRs), assembly language, and optimization techniques. The model has been trained on a vast corpus of 546 billion tokens of LLVM-IR and assembly code and has undergone instruction fine-tuning to interpret compiler behavior. LLM Compiler is released under a bespoke commercial license to allow wide reuse and is available in two sizes: 7 billion and 13 billion parameters. We also present fine-tuned versions of the model, demonstrating its enhanced capabilities in optimizing code size and disassembling from x86_64 and ARM assembly back into LLVM-IR. These achieve 77% of the optimising potential of an autotuning search, and 45% disassembly round trip (14% exact match). This release aims to provide a scalable, cost-effective foundation for further research and development in compiler optimization by both academic researchers and industry practitioners.

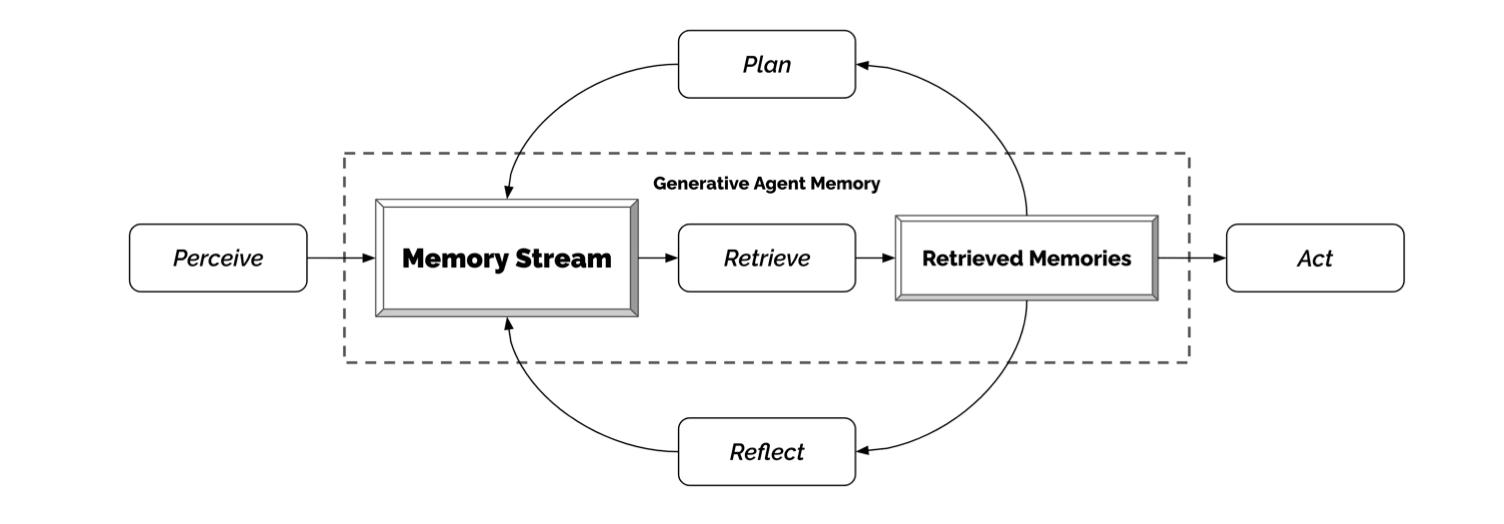

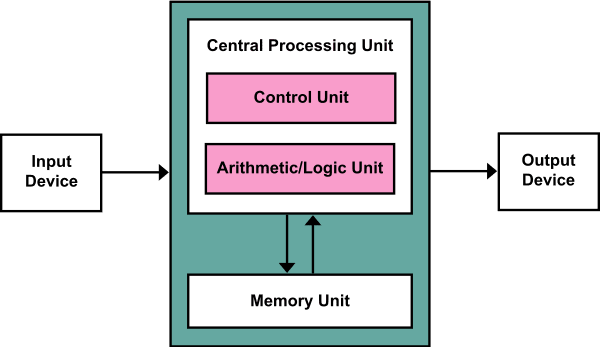

Nice job by Cal debunking misconceptions about AI model capabilities. The segment highlights a few points I cover in my unpublished NoLM - Not Only Language Models blog post. Specifically, language models on their own can't do much; they need to be connected to data sources and other systems. Complex AI systems will be built with more specialized roles and leverage various components for their planning and execution. In the end though, models will require integration into existing systems. Those integrations need to be done by people, meaning humans are still in control of the AI-assisted system capabilities.

Later in the podcast, Cal takes a question about distributed webs of trust. I agree with Cal's point about using existing open standards like RSS for content consumption. It's the reason you often hear the phrase, "or wherever you get your podcasts". Assuming you have a program that can read an RSS feed, you can follow all types of content. On the topic of discovery, Cal suggests using distributed webs of trust: domain names and linking as ways of discovering content. While blogrolls were not directly called out, that kind of discovery is one of the benefits a curated set of links provides.
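To make the "program that can read an RSS feed" point concrete, here's a minimal sketch using only Python's standard library. The feed contents and the `read_feed` helper are made up for illustration and inlined so it runs offline; a real reader would fetch the XML from a feed URL and handle more of the format.

```python
import xml.etree.ElementTree as ET

# Hypothetical feed, inlined so the example runs without a network call.
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Example Podcast</title>
    <link>https://example.com/</link>
    <item>
      <title>Episode 1</title>
      <link>https://example.com/episodes/1</link>
    </item>
    <item>
      <title>Episode 2</title>
      <link>https://example.com/episodes/2</link>
    </item>
  </channel>
</rss>"""


def read_feed(xml_text):
    """Return the channel title and a list of (title, link) pairs, one per item."""
    root = ET.fromstring(xml_text)
    channel = root.find("channel")
    title = channel.findtext("title")
    items = [
        (item.findtext("title"), item.findtext("link"))
        for item in channel.findall("item")
    ]
    return title, items


feed_title, feed_items = read_feed(SAMPLE_FEED)
print(feed_title)
for item_title, item_link in feed_items:
    print(f"- {item_title}: {item_link}")
```

The same few lines work whether the feed is a podcast, a blog, or a blogroll export, which is the appeal of the open standard.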

https://ntietz.com/blog/blogging-affirmations/

The affirmations

Here are the things I've seen and learned. Each of these will be expanded in its own section.

- You have things to write about.

- Your perspective matters.

- You are good enough.

- Posts don't have to be novel.

- People will read it.

- Mistakes are okay!

- It's okay to ask for things.

- You can get started quickly.

- You can write on a schedule.

Despite the odds, cassette tapes are making a comeback. And one family-owned company in Springfield, Missouri is a leader in the revival.

Hopefully this means that players are also making a comeback, because you need something to play them on. Having recently visited a museum full of old radio recordings on cassette tapes, I was sad to find out I couldn't listen to them because the tape player was broken. It's hard enough finding a decent MP3 player; I'm sure it must be just as hard, if not harder, to find a tape player.

https://www.modular.com/blog/deep-dive-into-ownership-in-mojo

In the second part of the ownership series in Mojo, we built on the mental model developed in the first part and provided practical examples to illustrate how ownership works in Mojo. We covered the different kinds of values (BValue, LValue, and RValue) and how they propagate through expressions. We also explained the function argument conventions (borrowed, inout, owned) and demonstrated how these conventions help manage memory safely and efficiently. We concluded with three fundamental rules:

- Rule 1: Owned arguments take RValue on the caller side but are LValue on the callee side.

- Rule 2: Owned arguments own the type if the transfer operator ^ is used; otherwise, they copy the type if it is Copyable.

- Rule 3: Copy operations are optimized to move operations if the type is Copyable and Movable and isn’t used anymore, reducing unnecessary overhead.

Lastly, we emphasized that the main goals of ownership in Mojo are:

- Memory Safety: Enforcing exclusive ownership and proper lifetimes to prevent memory errors such as use-after-free and double-free.

- Performance Optimization: Converting unnecessary copy operations into move operations to reduce overhead and enhance performance.

- Ease of Use: Automating memory management through ownership rules and the transfer operator, simplifying development.

- Compile-Time Guarantees: Providing strong compile-time guarantees through type-checking and dataflow lifetime analysis, catching errors early in the development process.

https://www.eff.org/deeplinks/2024/05/bigfoot

I’m proud to share the first post in a series from our friends, The Encryptids—the rarely-seen enigmas who inspire campfire lore. But this time, they’re spilling secrets about how they survive this ever-digital world. We begin by checking in with the legendary Bigfoot de la Sasquatch...

People say I'm the most famous of The Encryptids, but sometimes I don't want the spotlight. They all want a piece of me: exes, ad trackers, scammers, even the government. A picture may be worth a thousand words, but my digital profile is worth cash (to skeezy data brokers). I can’t hit a city block without being captured by doorbell cameras, CCTV, license plate readers, and a maze of street-level surveillance. It can make you want to give up on privacy altogether. Honey, no. Why should you have to hole up in some dank, busted forest for freedom and respect? You don’t.

Privacy isn't about hiding. It's about revealing what you want to who you want on your terms. It's your basic right to dignity.

https://www.anthropic.com/research/mapping-mind-language-model

Today we report a significant advance in understanding the inner workings of AI models. We have identified how millions of concepts are represented inside Claude Sonnet, one of our deployed large language models. This is the first ever detailed look inside a modern, production-grade large language model. This interpretability discovery could, in future, help us make AI models safer.

Previously, we made some progress matching patterns of neuron activations, called features, to human-interpretable concepts. We used a technique called "dictionary learning", borrowed from classical machine learning, which isolates patterns of neuron activations that recur across many different contexts. In turn, any internal state of the model can be represented in terms of a few active features instead of many active neurons. Just as every English word in a dictionary is made by combining letters, and every sentence is made by combining words, every feature in an AI model is made by combining neurons, and every internal state is made by combining features.
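A toy sketch of the "every internal state is made by combining features" idea, as I understand it from the excerpt. It only shows the representation (a few active features summed into a neuron-level state), not how the dictionary is learned. The feature names, the 4-neuron space, and all the numbers are made up for illustration.

```python
# Hypothetical dictionary: each feature is a direction in a tiny 4-neuron space.
FEATURES = {
    "feature_a": [1.0, 0.0, 0.5, 0.0],
    "feature_b": [0.0, 1.0, 0.0, 0.5],
    "feature_c": [0.5, 0.0, 0.0, 1.0],
}


def reconstruct(feature_activations):
    """Sum the active feature directions, scaled by their strengths."""
    size = len(next(iter(FEATURES.values())))
    state = [0.0] * size
    for name, strength in feature_activations.items():
        for i, weight in enumerate(FEATURES[name]):
            state[i] += strength * weight
    return state


# A sparse code: only two of the three features are active.
sparse_code = {"feature_a": 2.0, "feature_c": 1.0}
state = reconstruct(sparse_code)
print(state)
```

The point is that the two-entry `sparse_code` is easier to read than the four raw neuron values it expands to, and that gap grows with model size.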

In October 2023, we reported success applying dictionary learning to a very small "toy" language model and found coherent features corresponding to concepts like uppercase text, DNA sequences, surnames in citations, nouns in mathematics, or function arguments in Python code.

We used the same scaling law philosophy that predicts the performance of larger models from smaller ones to tune our methods at an affordable scale before launching on Sonnet.

We successfully extracted millions of features from the middle layer of Claude 3.0 Sonnet, (a member of our current, state-of-the-art model family, currently available on claude.ai), providing a rough conceptual map of its internal states halfway through its computation. This is the first ever detailed look inside a modern, production-grade large language model.

https://www.anthropic.com/research/claude-character

Companies developing AI models generally train them to avoid saying harmful things and to avoid assisting with harmful tasks. The goal of this is to train models to behave in ways that are "harmless". But when we think of the character of those we find genuinely admirable, we don’t just think of harm avoidance. We think about those who are curious about the world, who strive to tell the truth without being unkind, and who are able to see many sides of an issue without becoming overconfident or overly cautious in their views. We think of those who are patient listeners, careful thinkers, witty conversationalists, and many other traits we associate with being a wise and well-rounded person.

AI models are not, of course, people. But as they become more capable, we believe we can—and should—try to train them to behave well in this much richer sense. Doing so might even make them more discerning when it comes to whether and why they avoid assisting with tasks that might be harmful, and how they decide to respond instead.

Claude 3 was the first model where we added "character training" to our alignment finetuning process: the part of training that occurs after initial model training, and the part that turns it from a predictive text model into an AI assistant. The goal of character training is to make Claude begin to have more nuanced, richer traits like curiosity, open-mindedness, and thoughtfulness.

Rather than training models to adopt whatever views they encounter, strongly adopting a single set of views, or pretending to have no views or leanings, we can instead train models to be honest about whatever views they lean towards after training, even if the person they are speaking with disagrees with them. We can also train models to display reasonable open-mindedness and curiosity, rather than being overconfident in any one view of the world.

In order to steer Claude’s character and personality, we made a list of many character traits we wanted to encourage the model to have...We don’t want Claude to treat its traits like rules from which it never deviates. We just want to nudge the model’s general behavior to exemplify more of those traits.

Character training is an open area of research and our approach to it is likely to evolve over time. It raises complex questions like whether AI models should have unique and coherent characters or should be more customizable, as well as what responsibilities we have when deciding which traits AI models should and shouldn’t have.

https://github.com/fixie-ai/ultravox

Ultravox is a new kind of multimodal LLM that can understand text as well as human speech, without the need for a separate Audio Speech Recognition (ASR) stage. Building on research like AudioLM, SeamlessM4T, Gazelle, SpeechGPT, and others, we've extended Meta's Llama 3 model with a multimodal projector that converts audio directly into the high-dimensional space used by Llama 3. This direct coupling allows Ultravox to respond much more quickly than systems that combine separate ASR and LLM components. In the future this will also allow Ultravox to natively understand the paralinguistic cues of timing and emotion that are omnipresent in human speech.

The current version of Ultravox (v0.1), when invoked with audio content, has a time-to-first-token (TTFT) of approximately 200ms, and a tokens-per-second rate of ~100, all using a Llama 3 8B backbone. While quite fast, we believe there is considerable room for improvement in these numbers. We look forward to working with LLM hosting providers to deliver state-of-the-art performance for Ultravox.
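For a feel of what those numbers mean in practice, here's a back-of-the-envelope sketch. It assumes a simple model where the first token arrives after the TTFT and the rest stream at a constant rate. The function name is mine, and real latency will vary with hardware and load.

```python
def estimated_response_seconds(num_tokens, ttft_seconds=0.2, tokens_per_second=100.0):
    """Estimate time to receive num_tokens: TTFT plus streaming time for the rest."""
    if num_tokens < 1:
        raise ValueError("num_tokens must be at least 1")
    return ttft_seconds + (num_tokens - 1) / tokens_per_second


for n in (1, 50, 200):
    print(f"{n:>4} tokens: ~{estimated_response_seconds(n):.2f} s")
```

Under these assumptions a 50-token reply lands in roughly 0.7 seconds, which is why skipping a separate ASR stage matters for conversational use.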

Ultravox currently takes in audio and emits streaming text. As we evolve the model, we'll train it to be able to emit a stream of speech tokens that can then be converted directly into raw audio by an appropriate unit vocoder. We're interested in working with interested parties to build this functionality!

https://security.apple.com/blog/private-cloud-compute/

We set out to build Private Cloud Compute with a set of core requirements:

- Stateless computation on personal user data. ...we want a strong form of stateless data processing where personal data leaves no trace in the PCC system.

- Enforceable guarantee. Security and privacy guarantees are strongest when they are entirely technically enforceable, which means it must be possible to constrain and analyze all the components that critically contribute to the guarantees of the overall Private Cloud Compute system.

- No privileged runtime access. Private Cloud Compute must not contain privileged interfaces that would enable Apple’s site reliability staff to bypass PCC privacy guarantees, even when working to resolve an outage or other severe incident.

- Non-targetability. An attacker should not be able to attempt to compromise personal data that belongs to specific, targeted Private Cloud Compute users without attempting a broad compromise of the entire PCC system.

- Verifiable transparency. Security researchers need to be able to verify, with a high degree of confidence, that our privacy and security guarantees for Private Cloud Compute match our public promises.

https://machinelearning.apple.com/research/introducing-apple-foundation-models

Apple Intelligence is comprised of multiple highly-capable generative models that are specialized for our users’ everyday tasks, and can adapt on the fly for their current activity. The foundation models built into Apple Intelligence have been fine-tuned for user experiences such as writing and refining text, prioritizing and summarizing notifications, creating playful images for conversations with family and friends, and taking in-app actions to simplify interactions across apps.

In the following overview, we will detail how two of these models — a ~3 billion parameter on-device language model, and a larger server-based language model available with Private Cloud Compute and running on Apple silicon servers — have been built and adapted to perform specialized tasks efficiently, accurately, and responsibly. These two foundation models are part of a larger family of generative models created by Apple to support users and developers; this includes a coding model to build intelligence into Xcode, as well as a diffusion model to help users express themselves visually, for example, in the Messages app.

Pre-training

Our foundation models are trained on Apple's AXLearn framework, an open-source project we released in 2023. It builds on top of JAX and XLA, and allows us to train the models with high efficiency and scalability on various training hardware and cloud platforms, including TPUs and both cloud and on-premise GPUs. We used a combination of data parallelism, tensor parallelism, sequence parallelism, and Fully Sharded Data Parallel (FSDP) to scale training along multiple dimensions such as data, model, and sequence length.

Post-training

We find that data quality is essential to model success, so we utilize a hybrid data strategy in our training pipeline, incorporating both human-annotated and synthetic data, and conduct thorough data curation and filtering procedures. We have developed two novel algorithms in post-training: (1) a rejection sampling fine-tuning algorithm with teacher committee, and (2) a reinforcement learning from human feedback (RLHF) algorithm with mirror descent policy optimization and a leave-one-out advantage estimator. We find that these two algorithms lead to significant improvement in the model’s instruction-following quality.

Optimization

Both the on-device and server models use grouped-query-attention. We use shared input and output vocab embedding tables to reduce memory requirements and inference cost. These shared embedding tensors are mapped without duplications. The on-device model uses a vocab size of 49K, while the server model uses a vocab size of 100K, which includes additional language and technical tokens.

For on-device inference, we use low-bit palletization, a critical optimization technique that achieves the necessary memory, power, and performance requirements. To maintain model quality, we developed a new framework using LoRA adapters that incorporates a mixed 2-bit and 4-bit configuration strategy — averaging 3.5 bits-per-weight — to achieve the same accuracy as the uncompressed models.
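The 3.5 bits-per-weight figure is easy to sanity check. The sketch below assumes weights are stored at exactly 2 or 4 bits and ignores lookup tables, embeddings, and other overhead, so the memory numbers are rough estimates rather than Apple's figures. The ~3 billion parameter count comes from the overview above.

```python
def fraction_high_bits(avg_bits, low_bits=2, high_bits=4):
    """Solve high*x + low*(1 - x) = avg for x, the share of weights at high_bits."""
    return (avg_bits - low_bits) / (high_bits - low_bits)


def weight_bytes(num_params, bits_per_weight):
    """Raw storage for the weights alone, in bytes."""
    return num_params * bits_per_weight / 8


share_4bit = fraction_high_bits(3.5)
compressed_gb = weight_bytes(3e9, 3.5) / 1e9
uncompressed_gb = weight_bytes(3e9, 16) / 1e9

print(f"share of weights at 4 bits: {share_4bit:.0%}")
print(f"~3B params at 3.5 bits: ~{compressed_gb:.2f} GB")
print(f"~3B params at 16 bits:  ~{uncompressed_gb:.2f} GB")
```

So an average of 3.5 bits implies about three quarters of the weights at 4 bits and one quarter at 2 bits, and takes the weights from roughly 6 GB at 16 bits down to roughly 1.3 GB.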

Additionally, we use an interactive model latency and power analysis tool, Talaria, to better guide the bit rate selection for each operation. We also utilize activation quantization and embedding quantization, and have developed an approach to enable efficient Key-Value (KV) cache update on our neural engines.

Model Adaptation

Our foundation models are fine-tuned for users’ everyday activities, and can dynamically specialize themselves on-the-fly for the task at hand...

We represent the values of the adapter parameters using 16 bits, and for the ~3 billion parameter on-device model, the parameters for a rank 16 adapter typically require 10s of megabytes. The adapter models can be dynamically loaded, temporarily cached in memory, and swapped — giving our foundation model the ability to specialize itself on the fly for the task at hand while efficiently managing memory and guaranteeing the operating system's responsiveness.
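To see how a rank 16 adapter ends up in the tens of megabytes, here's a rough sketch using the usual LoRA parameter count of `rank * (d_in + d_out)` per adapted weight matrix. The layer shapes and counts below are hypothetical, picked only to show the arithmetic; the excerpt doesn't give the model's actual dimensions or which matrices are adapted.

```python
def lora_adapter_bytes(layer_shapes, rank=16, bits_per_param=16):
    """Total adapter size: each (d_in, d_out) matrix adds rank * (d_in + d_out) params."""
    params = sum(rank * (d_in + d_out) for d_in, d_out in layer_shapes)
    return params * bits_per_param // 8


# Hypothetical: 4 adapted square matrices per layer, 28 layers, width 3072.
HYPOTHETICAL_SHAPES = [(3072, 3072)] * (4 * 28)

size_mb = lora_adapter_bytes(HYPOTHETICAL_SHAPES) / 1e6
print(f"adapter size: ~{size_mb:.1f} MB")
```

With these made-up shapes the adapter comes out around 22 MB, which is at least consistent with the "10s of megabytes" in the quote and small enough to swap in and out per task.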

Performance and Evaluation

We compare our models with both open-source models (Phi-3, Gemma, Mistral, DBRX) and commercial models of comparable size (GPT-3.5-Turbo, GPT-4-Turbo). We find that our models are preferred by human graders over most comparable competitor models. On this benchmark, our on-device model, with ~3B parameters, outperforms larger models including Phi-3-mini, Mistral-7B, and Gemma-7B. Our server model compares favorably to DBRX-Instruct, Mixtral-8x22B, and GPT-3.5-Turbo while being highly efficient.

To further evaluate our models, we use the Instruction-Following Eval (IFEval) benchmark to compare their instruction-following capabilities with models of comparable size. The results suggest that both our on-device and server model follow detailed instructions better than the open-source and commercial models of comparable size.

https://www.apple.com/newsroom/2024/06/introducing-apple-intelligence-for-iphone-ipad-and-mac/

Apple today introduced Apple Intelligence, the personal intelligence system for iPhone, iPad, and Mac that combines the power of generative models with personal context to deliver intelligence that’s incredibly useful and relevant.

Apple Intelligence unlocks new ways for users to enhance their writing and communicate more effectively. With brand-new systemwide Writing Tools built into iOS 18, iPadOS 18, and macOS Sequoia, users can rewrite, proofread, and summarize text nearly everywhere they write...

Apple Intelligence powers exciting image creation capabilities to help users communicate and express themselves in new ways. With Image Playground, users can create fun images in seconds, choosing from three styles: Animation, Illustration, or Sketch...All images are created on device, giving users the freedom to experiment with as many images as they want.

Powered by Apple Intelligence, Siri becomes more deeply integrated into the system experience. With richer language-understanding capabilities, Siri is more natural, more contextually relevant, and more personal, with the ability to simplify and accelerate everyday tasks.

A cornerstone of Apple Intelligence is on-device processing, and many of the models that power it run entirely on device. To run more complex requests that require more processing power, Private Cloud Compute extends the privacy and security of Apple devices into the cloud to unlock even more intelligence.

Apple is integrating ChatGPT access into experiences within iOS 18, iPadOS 18, and macOS Sequoia, allowing users to access its expertise — as well as its image- and document-understanding capabilities — without needing to jump between tools.

https://bair.berkeley.edu/blog/2024/05/29/tiny-agent/

The ability of LLMs to execute commands through plain language (e.g. English) has enabled agentic systems that can complete a user query by orchestrating the right set of tools...recent multi-modal efforts such as the GPT-4o or Gemini-1.5 model, has expanded the realm of possibilities with AI agents. While this is quite exciting, the large model size and computational requirements of these models often requires their inference to be performed on the cloud. This can create several challenges for their widespread adoption. First and foremost, uploading data such as video, audio, or text documents to a third party vendor on the cloud, can result in privacy issues. Second, this requires cloud/Wi-Fi connectivity which is not always possible...latency could also be an issue as uploading large amounts of data to the cloud and waiting for the response could slow down response time, resulting in unacceptable time-to-solution. These challenges could be solved if we deploy the LLM models locally at the edge.

...current LLMs like GPT-4o or Gemini-1.5 are too large for local deployment. One contributing factor is that a lot of the model size ends up memorizing general information about the world into its parametric memory which may not be necessary for a specialized downstream application.

...this leads to an intriguing research question:

Can a smaller language model with significantly less parametric memory emulate such emergent ability of these larger language models?

Achieving this would significantly reduce the computational footprint of agentic systems and thus enable efficient and privacy-preserving edge deployment. Our study demonstrates that this is feasible for small language models through training with specialized, high-quality data that does not require recalling generic world knowledge.

Such a system could particularly be useful for semantic systems where the AI agent’s role is to understand the user query in natural language and, instead of responding with a ChatGPT-type question answer response, orchestrate the right set of tools and APIs to accomplish the user’s command. For example, in a Siri-like application, a user may ask a language model to create a calendar invite with particular attendees. If a predefined script for creating calendar items already exists, the LLM simply needs to learn how to invoke this script with the correct input arguments (such as attendees’ email addresses, event title, and time). This process does not require recalling/memorization of world knowledge from sources like Wikipedia, but rather requires reasoning and learning to call the right functions and to correctly orchestrate them.
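The calendar example maps nicely onto a small dispatcher. This is a sketch of the general function-calling pattern, not the TinyAgent implementation: the tool name, the JSON shape, and the hand-written `model_output` string are all assumptions standing in for what a fine-tuned model might emit.

```python
import json


def create_calendar_event(title, attendees, start_time):
    """Hypothetical predefined script; a real one would call the calendar API."""
    return {"title": title, "attendees": list(attendees), "start_time": start_time}


# Registry of tools the model is allowed to call.
TOOLS = {"create_calendar_event": create_calendar_event}


def dispatch(model_output):
    """Parse a JSON function call and run the matching tool with its arguments."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# Hand-written stand-in for the model's response to
# "set up a project sync with Ana and Ben tomorrow at 10".
model_output = json.dumps({
    "name": "create_calendar_event",
    "arguments": {
        "title": "Project sync",
        "attendees": ["ana@example.com", "ben@example.com"],
        "start_time": "2024-06-01T10:00",
    },
})

event = dispatch(model_output)
print(event)
```

Nothing here requires the model to know facts about the world. It only has to pick the right function name and fill in the arguments, which is the part the post argues a small model can learn.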

Our goal is to develop Small Language Models (SLM) that are capable of complex reasoning that could be deployed securely and privately at the edge. Here we will discuss the research directions that we are pursuing to that end. First, we discuss how we can enable small open-source models to perform accurate function calling, which is a key component of agentic systems. It turns out that off-the-shelf small models have very low function calling capabilities. We discuss how we address this by systematically curating high-quality data for function calling, using a specialized Mac assistant agent as our driving application. We then show that fine-tuning the model on this high quality curated dataset, can enable SLMs to even exceed GPT-4-Turbo’s function calling performance. We then show that this could be further improved and made efficient through a new Tool RAG method. Finally, we show how the final models could be deployed efficiently at the edge with real time responses.

https://genai-handbook.github.io/

This document aims to serve as a handbook for learning the key concepts underlying modern artificial intelligence systems. Given the speed of recent development in AI, there really isn’t a good textbook-style source for getting up-to-speed on the latest-and-greatest innovations in LLMs or other generative models, yet there is an abundance of great explainer resources (blog posts, videos, etc.) for these topics scattered across the internet. My goal is to organize the “best” of these resources into a textbook-style presentation, which can serve as a roadmap for filling in the prerequisites towards individual AI-related learning goals. My hope is that this will be a “living document”, to be updated as new innovations and paradigms inevitably emerge, and ideally also a document that can benefit from community input and contribution. This guide is aimed at those with a technical background of some kind, who are interested in diving into AI either out of curiosity or for a potential career. I’ll assume that you have some experience with coding and high-school level math, but otherwise will provide pointers for filling in any other prerequisites.

Cool project.

Turn a fresh Ubuntu installation into a fully-configured, beautiful, and modern web development system by running a single command.

Omakub is an opinionated take on what Linux can be at its best.

Omakub includes a curated set of applications and tools that one might discover through hours of watching YouTube, reading blogs, or just stumbling around Linux internet. All so someone coming straight from a platform like Windows or the Mac can immediately start enjoying a ready-made system, without having to do any configuration and curation legwork at all.

I'm guilty of owning way too many domains, but this shorthand domain (lqdev.me) and my other permadomain (luisquintanilla.me) are the ones I use most.

A potential happy medium I've seen is using subdomains (i.e. project.lqdev.me).

Jim Nielsen has great examples of how he's doing that today. Though that's an older post, he also addressed it most recently in the post Domain Sins of My Youth.

That somehow feels better than the URL you get when you use free hosting like GitHub Pages. As a bonus, it's already tied to your identity (if using your personal domain). Also, you save some money.

There are a few ways I practice this today. In addition to the previously mentioned domains, I also own lqdev.tech. Services and projects I host live there.

For example:

- Mastodon Instance (toot.lqdev.tech)

- Matrix server (matrix.lqdev.tech)

- Webmentions service (webmentions.lqdev.tech)

So far it's been working well.

https://www.manton.org/2024/05/29/podcast-hosting-for.html

This is cool! The Micro.blog premium plan has tons of great features as well.

Six years ago, we launched our $10/month plan with podcast hosting. Since then we’ve added several big features to the plan...

Today, I want to bring the podcast feature to more people, so we’re moving it down to the standard $5/month plan.

https://github.com/karpathy/llm.c/discussions/481

...the TLDR is that we're training a 12-layer GPT-2 (124M), from scratch, on 10B tokens of FineWeb, with max sequence length of 1024 tokens.

The 124M model is the smallest model in the GPT-2 series released by OpenAI in 2019, and is actually quite accessible today, even for the GPU poor. With llm.c, which is quite efficient at up to ~60% model flops utilization, reproducing this model on one 8X A100 80GB SXM node takes ~90 minutes. For example, on Lambda this node goes for ~$14/hr, so the total cost of reproducing this model today is about $20. You can train the model with a single GPU too, it would just take proportionally longer (e.g. ~4-24 hours depending on the GPU).
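The cost math in the quote checks out with quick arithmetic. The sketch below just plugs in the quoted figures (90 minutes, ~$14/hr, 8 GPUs, 10B tokens). The function names are mine, and the throughput and GPU-hour numbers are derived from those figures rather than reported in the post.

```python
def training_cost_usd(minutes, dollars_per_hour):
    """Cost of renting the node for the whole run."""
    return minutes / 60 * dollars_per_hour


def tokens_per_second(total_tokens, minutes):
    """Average training throughput implied by the run length."""
    return total_tokens / (minutes * 60)


def gpu_hours(num_gpus, minutes):
    """Total GPU time, useful for estimating a single-GPU run."""
    return num_gpus * minutes / 60


print(f"cost: ~${training_cost_usd(90, 14):.0f}")
print(f"throughput: ~{tokens_per_second(10e9, 90) / 1e6:.2f}M tokens/s")
print(f"GPU time: {gpu_hours(8, 90):.0f} GPU-hours")
```

That's about $21, in line with the quoted "about $20", and 12 GPU-hours, which sits inside the quoted ~4-24 hour single-GPU range if you assume one GPU runs at a similar per-GPU speed.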

21 years since Mike and I did the first release of WordPress, forking Michel’s work on b2/cafélog.

I’ve been thinking a lot about elements that made WordPress successful in its early years that we should keep in mind as we build this year and beyond. Here’s 11 opinions:

- Simple things should be easy and intuitive, and complex things possible.

...- Wikis are amazing, and our documentation should be wiki-easy to edit.

...- It’s important that we all do support, go to meetups and events, anything we can to stay close to regular end-users of what we make.

Congrats to WordPress and the team on 21 years. I think these are some good elements to keep building on.

https://www.theverge.com/2024/5/23/24163225/daylight-dc1-tablet-livepaper

Like many in the comments, I like the idea of this device and what it's aiming to do.

The "LivePaper" display technology seems interesting.

At $729 though, it seems a little steep. I paid a fraction of that for my Onyx Boox Nova Air 2 and I have most of the functionality of this device. As an e-reader, note-taking device, and tablet that can install any Android app, it works perfectly fine for my use cases and I'm very happy with it.

Still, I'm interested in seeing whether this takes off or whether it ends up being another Rabbit R1 or Humane Ai Pin.

🙋

Great post.

One of the challenges I've found, even when using appliances from hosting providers like Linode or tools like YunoHost, is connecting them to your domain.

Usually, that's specific to your domain name provider and usually a manual process.

In cases where that's easy, you're often overcharged for the convenience. Compared to "free" social media and publishing websites, it makes using your own domain a less desirable option.

Maybe advocating for a multi-staged approach like IndieWebify.Me could make the journey more approachable. More importantly, making it a journey rather than a destination could help meet folks where they are and guide them closer to their goals.

In any case, the proposed list of goals seems like a great start towards helping people create their own place on the web.

https://www.theverge.com/2024/5/24/24163865/doge-meme-shiba-inu-kabosu-dead-crypto

The face of one of the defining memes of the 2010s, the doge meme, died on Friday. Kabosu, the shiba inu with the knowing face that launched a million internet jokes, was 18 when she died.

Between this article and The Dumbphone Boom is Real, I'm seeing more publications on the subject. Far from a comeback, but still cool to see.

https://kamasiwashington.ffm.to/fearlessmovement

2024 just keeps getting better in terms of new music releases.

Kamasi Washington has a new album coming out this week called Fearless Movement.

The most recent single, Dream State, with Andre 3000 is great.

https://www.newyorker.com/culture/infinite-scroll/the-dumbphone-boom-is-real

Will Stults spent too much time on his iPhone, doom-scrolling the site formerly known as Twitter and tweeting angrily at Elon Musk as if the billionaire would actually notice. Stults’s partner, Daisy Krigbaum, was addicted to Pinterest and YouTube, bingeing videos on her iPhone before going to sleep. Two years ago, they both tried Apple’s Screen Time restriction tool and found it too easy to disable, so the pair decided to trade out their iPhones for more low-tech devices. They’d heard about so-called dumbphones, which lacked the kinds of bells and whistles—a high-resolution screen, an app store, a video camera—that made smartphones so addictive. But they found the process of acquiring one hard to navigate. “The information on it was kind of disparate and hard to get to. A lot of people who know the most about dumbphones spend the least time online,” Krigbaum said. A certain irony presented itself: figuring out a way to be less online required aggressive online digging.

The growing dumbphone fervor may be motivated, in part, by the discourse around child safety online. Parents are increasingly confronted with evidence that sites like Instagram and TikTok intentionally try to hook their children. Using those sites can increase teens’ anxiety and lower their self-esteem, according to some studies, and smartphones make it so that kids are logged on constantly. Why should this situation be any healthier for adults? After almost two decades with iPhones, the public seems to be experiencing a collective ennui with digital life. So many hours of each day are lived through our portable, glowing screens, but the Internet isn’t even fun anymore. We lack the self-control to wean ourselves off, so we crave devices that actively prevent us from getting sucked into them. That means opting out of the prevailing technology and into what Cal Newport, a contributing writer for The New Yorker, has called a more considered “digital minimalism.”

While dumbphones aren't a cure-all for unhealthy technology habits, as a dumbphone user, I can relate to the frustrations that come from the lack of device availability and support. Even when new devices hit the market, they tend to be targeted towards non-US markets.

https://www.snowflake.com/blog/arctic-open-efficient-foundation-language-models-snowflake/

Today, the Snowflake AI Research Team is thrilled to introduce Snowflake Arctic, a top-tier enterprise-focused LLM that pushes the frontiers of cost-effective training and openness. Arctic is efficiently intelligent and truly open.

- Efficiently Intelligent: Arctic excels at enterprise tasks such as SQL generation, coding and instruction following benchmarks even when compared to open source models trained with significantly higher compute budgets. In fact, it sets a new baseline for cost-effective training to enable Snowflake customers to create high-quality custom models for their enterprise needs at a low cost.

- Truly Open: Apache 2.0 license provides ungated access to weights and code. In addition, we are also open sourcing all of our data recipes and research insights.

Snowflake Arctic is available from Hugging Face, NVIDIA API catalog and Replicate today or via your model garden or catalog of choice, including Snowflake Cortex, Amazon Web Services (AWS), Microsoft Azure, Lamini, Perplexity and Together over the coming days.

https://www.theverge.com/2024/4/24/24139057/pbs-retro-free-roku-channel-fast-streaming

PBS is making child edutainment classics like Zoboomafoo, Mister Rogers’ Neighborhood, and Reading Rainbow available for free on a new ‘PBS Retro’ channel on Roku.

This is cool! Although I'm kind of bummed the stuff I grew up watching is now considered "retro".

https://www.noemamag.com/we-need-to-rewild-the-internet/

The internet has become an extractive and fragile monoculture. But we can revitalize it using lessons learned by ecologists.

Our online spaces are not ecosystems, though tech firms love that word. They’re plantations; highly concentrated and controlled environments...

We all know this. We see it each time we reach for our phones. But what most people have missed is how this concentration reaches deep into the internet’s infrastructure — the pipes and protocols, cables and networks, search engines and browsers. These structures determine how we build and use the internet, now and in the future.

They’ve concentrated into a series of near-planetary duopolies.

Two kinds of everything may be enough to fill a fictional ark and repopulate a ruined world, but can’t run an open, global “network of networks” where everyone has the same chance to innovate and compete.

The internet made the tech giants possible. Their services have scaled globally, via its open, interoperable core. But for the past decade, they’ve also worked to enclose the varied, competing and often open-source or collectively provided services the internet is built on into their proprietary domains. Although this improves their operational efficiency, it also ensures that the flourishing conditions of their own emergence aren’t repeated by potential competitors. For tech giants, the long period of open internet evolution is over. Their internet is not an ecosystem. It’s a zoo.

Up close, internet concentration seems too intricate to untangle; from far away, it seems too difficult to deal with. But what if we thought of the internet not as a doomsday “hyperobject,” but as a damaged and struggling ecosystem facing destruction? What if we looked at it not with helpless horror at the eldritch encroachment of its current controllers, but with compassion, constructiveness and hope?

Rewilding “aims to restore healthy ecosystems by creating wild, biodiverse spaces,” according to the International Union for Conservation of Nature. More ambitious and risk-tolerant than traditional conservation, it targets entire ecosystems to make space for complex food webs and the emergence of unexpected interspecies relations. It’s less interested in saving specific endangered species. Individual species are just ecosystem components, and focusing on components loses sight of the whole. Ecosystems flourish through multiple points of contact between their many elements, just like computer networks. And like in computer networks, ecosystem interactions are multifaceted and generative.

Whatever we do, the internet isn’t returning to old-school then-common interfaces like FTP and Gopher, or organizations operating their own mail servers again instead of off-the-shelf solutions like G-Suite. But some of what we need is already here, especially on the web. Look at the resurgence of RSS feeds, email newsletters and blogs, as we discover (yet again) that relying on one app to host global conversations creates a single point of failure and control. New systems are growing, like the Fediverse with its federated islands, or Bluesky with algorithmic choice and composable moderation.

We don’t know what the future holds. Our job is to keep open as much opportunity as we can, trusting that those who come later will use it. Instead of setting purity tests for which kind of internet is most like the original, we can test changes against the values of the original design. Do new standards protect the network’s “generality,” i.e. its ability to support multiple uses, or is functionality limited to optimize efficiency for the biggest tech firms?

...our internet took off because it was designed as a general-purpose network, built to connect anyone.

Our internet was built to be complex and unbiddable, to do things we cannot yet imagine.

Internet infrastructure is a degraded ecosystem, but it’s also a built environment, like a city. Its unpredictability makes it generative, worthwhile and deeply human.

We need to stop thinking of internet infrastructure as too hard to fix. It’s the underlying system we use for nearly everything we do.

Rewilding the internet connects and grows what people are doing across regulation, standards-setting and new ways of organizing and building infrastructure, to tell a shared story of where we want to go. It’s a shared vision with many strategies. The instruments we need to shift away from extractive technological monocultures are at hand or ready to be built.

Calculus Made Easy is a book on calculus originally published in 1910 by Silvanus P. Thompson, considered a classic and elegant introduction to the subject.

https://projects.kwon.nyc/internet-is-fun/

I’ve been meaning to write some kind of Important Thinkpiece™ on the glory days of the early internet, but every time I sit down to do it, I find another, better piece that someone else has already written. So for now, here’s a collection of articles that to some degree answer the question “Why have a personal website?” with “Because it’s fun, and the internet used to be fun.”

This is a great catalog of posts about the personal web, courtesy of Rachel Kwon.

This is a site encouraging non-technical people and organisations to create their own online services such as websites, social networks, personal clouds, instant messaging etc.

Large language models (LLMs) have revolutionized a wide range of tasks and applications that were previously reliant on manually crafted machine learning (ML) solutions, streamlining through automation. However, despite these advances, a notable challenge persists: the need for extensive prompt engineering to adapt these models to new tasks. New generations of language models like GPT-4 and Mixtral 8x7B advance the capability to process long input texts. This progress enables the use of longer inputs, providing richer context and detailed instructions to language models. A common technique that uses this enhanced capacity is the Retrieval Augmented Generation (RAG) approach. RAG dynamically incorporates information into the prompt based on the specific input example.

To address these challenges, we developed the Structure-Aware Multi-objective Metaprompt Optimization (SAMMO) framework. SAMMO is a new open-source tool that streamlines the optimization of prompts, particularly those that combine different types of structural information like in the RAG example above. It can make structural changes, such as removing entire components or replacing them with different ones. These features enable AI practitioners and researchers to efficiently refine their prompts with little manual effort.

Central to SAMMO’s innovation is its approach to treating prompts not just as static text inputs but as dynamic, programmable entities—metaprompts. SAMMO represents these metaprompts as function graphs, where individual components and substructures can be modified to optimize performance, similar to the optimization process that occurs during traditional program compilation.

The following key features contribute to SAMMO’s effectiveness:

- Structured optimization: Unlike current methods that focus on text-level changes, SAMMO focuses on optimizing the structure of metaprompts. This granular approach facilitates precise modifications and enables the straightforward integration of domain knowledge, for instance, through rewrite operations targeting specific stylistic objectives.

- Multi-objective search: SAMMO’s flexibility enables it to simultaneously address multiple objectives, such as improving accuracy and computational efficiency. Our paper illustrates how SAMMO can be used to compress prompts without compromising their accuracy.

- General purpose application: SAMMO has proven to deliver significant performance improvements across a variety of tasks, including instruction tuning, RAG, and prompt compression.
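To make the idea of a prompt as a structure rather than a flat string more concrete, here is a minimal sketch of my own. It is not SAMMO's API: the `Text` and `Section` classes and the `render` and `drop_section` helpers are hypothetical names invented for illustration. It only shows what a structural change, such as removing a whole component, could look like on a tree of prompt parts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Text:
    """A leaf holding literal prompt text (hypothetical class, not SAMMO's)."""
    content: str


@dataclass(frozen=True)
class Section:
    """A named group of child nodes (hypothetical class, not SAMMO's)."""
    name: str
    children: tuple = ()


def render(node):
    """Flatten the tree into the final prompt string."""
    if isinstance(node, Text):
        return node.content
    return "\n".join(render(child) for child in node.children)


def drop_section(node, name):
    """Return a copy of the tree with every Section called `name` removed."""
    if isinstance(node, Text):
        return node
    kept = tuple(
        drop_section(child, name)
        for child in node.children
        if not (isinstance(child, Section) and child.name == name)
    )
    return Section(node.name, kept)


prompt = Section("root", (
    Section("instructions", (Text("Classify the review as positive or negative."),)),
    Section("examples", (Text("Review: great! -> positive"),)),
    Section("input", (Text("Review: {review}"),)),
))

# A structural mutation: remove the few-shot examples to shorten the prompt.
compressed = drop_section(prompt, "examples")
print(render(compressed))
```

A search procedure could then score each mutated tree on several objectives at once, for example accuracy on a validation set and rendered length. That part needs a model call, so it is left out of this sketch.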

Reading this post and attached image brings back so many memories.

I remember in the last few years of Blockbuster and early days of Netflix, for about $20/month you could rent unlimited movies (I think it was up to three at a time).

If you binge watched them or weren't happy with the choices you made, you could just drive down to your local store, return them, and grab a new set of movies.

Initially you had a return period, but towards the end, there was none, so you basically got to keep some movies as long as you liked.

Good times.

https://activitypub.ghost.org/

In 2024, Ghost is adopting ActivityPub and connecting with other federated platforms across the web.

This means that, soon, Ghost publishers will be able to follow, like and interact with one another in the same way that you would normally do on a social network — but on your own website.

The difference, of course, is that you’ll also be able to follow, like, and interact with users on Mastodon, Threads, Flipboard, Buttondown, WriteFreely, Tumblr, WordPress, PeerTube, Pixelfed... or any other platform that has adopted ActivityPub, too. You don’t need to limit yourself to following people who happen to use the same platform as you.

For the past few years the choice has been difficult. Either participate in closed networks at the mercy of algorithms, or set up an independent website at the expense of your growth.

Email gave us private messaging technology that isn’t owned by a single company.

ActivityPub is doing the same for social technology.

The open web is coming back, and with it returns diversity. You can both publish independently and grow faster than ever before with followers from all over the world & the web.

I can't express how much I love this. Personally, I don't use Ghost, but with platforms like WordPress and now Ghost adding support for ActivityPub, people are empowered to build their own platforms.

That said, this still doesn't address the challenges of building your own website. As mentioned in the post, not having to deal with those challenges is one of the appealing aspects of current closed networks.

Still though, there is a vast number of creators, businesses, and company websites or blogs that can benefit from this today. When paired with RSS, it gives people choice and autonomy in how they create and consume content, as I mentioned in a previous post, Rediscovering the RSS protocol.

https://github.com/google-deepmind/penzai

A JAX research toolkit for building, editing, and visualizing neural networks.

Penzai is a JAX library for writing models as legible, functional pytree data structures, along with tools for visualizing, modifying, and analyzing them. Penzai focuses on making it easy to do stuff with models after they have been trained, making it a great choice for research involving reverse-engineering or ablating model components, inspecting and probing internal activations, performing model surgery, debugging architectures, and more. (But if you just want to build and train a model, you can do that too!)

https://azure.microsoft.com/en-us/blog/introducing-phi-3-redefining-whats-possible-with-slms/

Starting today, Phi-3-mini, a 3.8B language model is available on Microsoft Azure AI Studio, Hugging Face, and Ollama.

- Phi-3-mini is available in two context-length variants—4K and 128K tokens. It is the first model in its class to support a context window of up to 128K tokens, with little impact on quality.

- It is instruction-tuned, meaning that it’s trained to follow different types of instructions reflecting how people normally communicate. This ensures the model is ready to use out-of-the-box.

- It is available on Azure AI to take advantage of the deploy-eval-finetune toolchain, and is available on Ollama for developers to run locally on their laptops.

- It has been optimized for ONNX Runtime with support for Windows DirectML along with cross-platform support across graphics processing unit (GPU), CPU, and even mobile hardware.

- It is also available as an NVIDIA NIM microservice with a standard API interface that can be deployed anywhere. And has been optimized for NVIDIA GPUs.

In the coming weeks, additional models will be added to Phi-3 family to offer customers even more flexibility across the quality-cost curve. Phi-3-small (7B) and Phi-3-medium (14B) will be available in the Azure AI model catalog and other model gardens shortly.

https://llama.meta.com/llama3/

Build the future of AI with Meta Llama 3

Now available with both 8B and 70B pretrained and instruction-tuned versions to support a wide range of applications

Llama 3 models take data and scale to new heights. It’s been trained on our two recently announced custom-built 24K GPU clusters on over 15T token of data – a training dataset 7x larger than that used for Llama 2, including 4x more code. This results in the most capable Llama model yet, which supports a 8K context length that doubles the capacity of Llama 2.

http://radiobilingue.org/rb-programas/alterlatino/

While I was listening to KHOL earlier today, they were rebroadcasting a recording of A Todo Pulmon, a radio show from Radio Bilingue in Fresno, CA. Good stuff.

https://proton.me/blog/proton-standard-notes-join-forces

...today, we’re happy to announce that Standard Notes will also join us to advance our shared mission.

Both Proton and Standard Notes share a strong commitment to our communities, so Standard Notes will remain open source, freely available, and fully supported. Prices are not changing, and if you have a current subscription to Standard Notes, it will continue to be honored. Proton aspires to do the right thing and be a responsible home for open-source projects, and just as we did with SimpleLogin, we are committed to preserving what makes Standard Notes special and much loved.

In the coming months, we hope to find ways to make Standard Notes more easily accessible to the Proton community. This way, in addition to protecting your email, calendar, files, passwords, and online activity, you can also protect your notes.

This is another exciting acquisition! I mainly use org-mode in Emacs for note taking. However, I love the ecosystem Proton is building with their security- and privacy-focused set of collaborative software offerings.

https://arxiv.org/abs/2402.19427

Recurrent neural networks (RNNs) have fast inference and scale efficiently on long sequences, but they are difficult to train and hard to scale. We propose Hawk, an RNN with gated linear recurrences, and Griffin, a hybrid model that mixes gated linear recurrences with local attention. Hawk exceeds the reported performance of Mamba on downstream tasks, while Griffin matches the performance of Llama-2 despite being trained on over 6 times fewer tokens. We also show that Griffin can extrapolate on sequences significantly longer than those seen during training. Our models match the hardware efficiency of Transformers during training, and during inference they have lower latency and significantly higher throughput. We scale Griffin up to 14B parameters, and explain how to shard our models for efficient distributed training.

https://arxiv.org/abs/2402.09910

How can we detect if copyrighted content was used in the training process of a language model, considering that the training data is typically undisclosed? We are motivated by the premise that a language model is likely to identify verbatim excerpts from its training text. We propose DE-COP, a method to determine whether a piece of copyrighted content was included in training. DE-COP's core approach is to probe an LLM with multiple-choice questions, whose options include both verbatim text and their paraphrases. We construct BookTection, a benchmark with excerpts from 165 books published prior and subsequent to a model's training cutoff, along with their paraphrases. Our experiments show that DE-COP surpasses the prior best method by 9.6% in detection performance (AUC) on models with logits available. Moreover, DE-COP also achieves an average accuracy of 72% for detecting suspect books on fully black-box models where prior methods give ≈ 4% accuracy. Our code and datasets are available at this https URL
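To get a feel for the multiple-choice probing idea, here is a rough sketch of how one such question could be assembled. The prompt wording, the choice of four options, and the `build_question` and `accuracy` helpers are my own assumptions, not taken from the DE-COP code. No model is called here; the snippet only builds the question and scores answers that a model would have returned.

```python
import random
import string


def build_question(verbatim, paraphrases, rng):
    """Shuffle the verbatim excerpt among its paraphrases.

    Returns (prompt, correct_label). The wording is an assumption.
    """
    options = [verbatim, *paraphrases]
    rng.shuffle(options)
    labels = string.ascii_uppercase[:len(options)]
    lines = ["Which passage is the verbatim excerpt from the book?"]
    lines += [f"{label}. {text}" for label, text in zip(labels, options)]
    lines.append("Answer:")
    return "\n".join(lines), labels[options.index(verbatim)]


def accuracy(model_answers, correct_labels):
    """Fraction of questions where the model picked the verbatim option."""
    hits = sum(a == c for a, c in zip(model_answers, correct_labels))
    return hits / len(correct_labels)


rng = random.Random(0)  # seeded so the option order is reproducible
prompt, key = build_question(
    "It was the best of times, it was the worst of times.",
    [
        "Those days were at once the finest and the most dreadful.",
        "The era was both wonderful and terrible.",
        "It was a period of great highs and great lows.",
    ],
    rng,
)
print(prompt)
```

With four options, a model that has never seen the book should land near 25% accuracy, so picking the verbatim option well above that rate across many excerpts is the signal of interest.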

https://arxiv.org/abs/2401.02115

Text-to-SQL models can generate a list of candidate SQL queries, and the best query is often in the candidate list, but not at the top of the list. An effective re-rank method can select the right SQL query from the candidate list and improve the model's performance. Previous studies on code generation automatically generate test cases and use them to re-rank candidate codes. However, automatic test case generation for text-to-SQL is an understudied field. We propose an automatic test case generation method that first generates a database and then uses LLMs to predict the ground truth, which is the expected execution results of the ground truth SQL query on this database. To reduce the difficulty for LLMs to predict, we conduct experiments to search for ways to generate easy databases for LLMs and design easy-to-understand prompts. Based on our test case generation method, we propose a re-rank method to select the right SQL query from the candidate list. Given a candidate list, our method can generate test cases and re-rank the candidate list according to their pass numbers on these test cases and their generation probabilities. The experiment results on the validation dataset of Spider show that the performance of some state-of-the-art models can get a 3.6% improvement after applying our re-rank method.
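The re-ranking step described above can be sketched in a few lines with SQLite. This is my own illustration, not the paper's code. I assume each test case is a pair of database setup SQL and expected rows, and that candidates are ordered by pass count first and generation probability second; the abstract does not say exactly how the two signals are combined. The `run_query` and `rerank` names are hypothetical.

```python
import sqlite3


def run_query(setup_sql, query):
    """Run `query` on a fresh in-memory database; return sorted rows or None on error."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(setup_sql)
        return sorted(conn.execute(query).fetchall())
    except sqlite3.Error:
        return None
    finally:
        conn.close()


def rerank(candidates, test_cases):
    """Order (sql, probability) candidates by tests passed, then by probability."""
    scored = []
    for sql, prob in candidates:
        passed = sum(
            run_query(setup, sql) == sorted(expected)
            for setup, expected in test_cases
        )
        scored.append((passed, prob, sql))
    scored.sort(key=lambda item: (item[0], item[1]), reverse=True)
    return [sql for _, _, sql in scored]


setup = """
CREATE TABLE singer(name TEXT, age INTEGER);
INSERT INTO singer VALUES ('a', 30), ('b', 20);
"""
# Expected rows stand in for the LLM-predicted ground truth from the paper.
test_cases = [(setup, [("a",)])]
candidates = [
    ("SELECT name FROM singer", 0.6),
    ("SELECT name FROM singer WHERE age > 25", 0.4),
    ("SELEC name FROM singer", 0.9),  # invalid SQL, passes no tests
]
ranked = rerank(candidates, test_cases)
print(ranked[0])
```

Here the lower-probability query moves to the top because it is the only candidate whose result matches the expected rows.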

https://github.com/google-deepmind/recurrentgemma

RecurrentGemma is a family of open-weights Language Models by Google DeepMind, based on the novel Griffin architecture. This architecture achieves fast inference when generating long sequences by replacing global attention with a mixture of local attention and linear recurrences.

This repository contains the model implementation and examples for sampling and fine-tuning. We recommend most users adopt the Flax implementation, which is highly optimized. We also provide an un-optimized PyTorch implementation for reference.

https://blog.allenai.org/hello-olmo-a-truly-open-llm-43f7e7359222

Today, The Allen Institute for AI (AI2) has released OLMo 7B, a truly open, state-of-the-art large language model released alongside the pre-training data and training code. This empowers researchers and developers to use the best and open models to advance the science of language models collectively.

https://github.com/karpathy/llm.c

LLM training in simple, pure C/CUDA. There is no need for 245MB of PyTorch or 107MB of cPython. For example, training GPT-2 (CPU, fp32) is ~1,000 lines of clean code in a single file. It compiles and runs instantly, and exactly matches the PyTorch reference implementation. I chose GPT-2 as the first working example because it is the grand-daddy of LLMs, the first time the modern stack was put together.

https://www.theverge.com/2024/4/9/24124179/beeper-app-automattic-acquisition-matrix-messaging

Beeper, the upstart messaging app that attempts to corral all your messaging services into one inbox, is being acquired by Automattic, the giant that runs Wordpress.com, Tumblr, and a number of other hugely popular web properties

This is exciting, especially given some of the recent developments in the EU. What's most interesting to me is how Beeper leverages open protocols like Matrix for bridging capabilities where possible to provide secure messaging.

With more people moving to smaller spaces to communicate with their communities, being able to do so in a single place without everyone being on the same platform, like in the early days of the internet, is a welcome development.

Additional coverage from the Beeper blog.

What we’re announcing today…

- No more waitlist – Beeper is now available to everyone!

- Beeper has been acquired by Automattic

- Our new Android app is out of beta

- We’re renaming Beeper Cloud → Beeper (sorry for the confusion)

Today the announcement went out that we’re combining the best technology from Beeper and Texts to create a great private, secure, and open source messaging client for people to have control of their communications. We’re going to use the Beeper brand, because it’s fun. This is not unlike how browsers have evolved, where solid tech and encryption on top of an open ecosystem has created untold value for humanity.

A lot of people are asking about iMessage on Android… I have zero interest in fighting with Apple, I think instead it’s best to focus on messaging networks that want more engagement from power-user clients. This is an area I’m excited to work on when I return from my sabbatical next month.

https://arxiv.org/abs/2404.01037

Retrieval-Augmented Generation (RAG) is essential for integrating external knowledge into Large Language Model (LLM) outputs. While the literature on RAG is growing, it primarily focuses on systematic reviews and comparisons of new state-of-the-art (SoTA) techniques against their predecessors, with a gap in extensive experimental comparisons. This study begins to address this gap by assessing various RAG methods' impacts on retrieval precision and answer similarity. We found that Hypothetical Document Embedding (HyDE) and LLM reranking significantly enhance retrieval precision. However, Maximal Marginal Relevance (MMR) and Cohere rerank did not exhibit notable advantages over a baseline Naive RAG system, and Multi-query approaches underperformed. Sentence Window Retrieval emerged as the most effective for retrieval precision, despite its variable performance on answer similarity. The study confirms the potential of the Document Summary Index as a competent retrieval approach. All resources related to this research are publicly accessible for further investigation through our GitHub repository ARAGOG (this https URL). We welcome the community to further this exploratory study in RAG systems.

https://www.mollywhite.net/blogroll/

Bookmarking for reference.

I'm already subscribed to many of these websites and publications. However, there are several new ones I found that I think will eventually make their way into my blogroll.

New fine-tuning API features

Today, we’re introducing new features to give developers even more control over their fine-tuning jobs, including:

- Epoch-based Checkpoint Creation: Automatically produce one full fine-tuned model checkpoint during each training epoch, which reduces the need for subsequent retraining, especially in the cases of overfitting

- Comparative Playground: A new side-by-side Playground UI for comparing model quality and performance, allowing human evaluation of the outputs of multiple models or fine-tune snapshots against a single prompt

- Third-party Integration: Support for integrations with third-party platforms (starting with Weights and Biases this week) to let developers share detailed fine-tuning data to the rest of their stack

- Comprehensive Validation Metrics: The ability to compute metrics like loss and accuracy over the entire validation dataset instead of a sampled batch, providing better insight on model quality

- Hyperparameter Configuration: The ability to configure available hyperparameters from the Dashboard (rather than only through the API or SDK)

- Fine-Tuning Dashboard Improvements: Including the ability to configure hyperparameters, view more detailed training metrics, and rerun jobs from previous configurations

Expanding our Custom Models Program

- Assisted Fine-Tuning

Today, we are formally announcing our assisted fine-tuning offering as part of the Custom Model program. Assisted fine-tuning is a collaborative effort with our technical teams to leverage techniques beyond the fine-tuning API, such as additional hyperparameters and various parameter efficient fine-tuning (PEFT) methods at a larger scale. It’s particularly helpful for organizations that need support setting up efficient training data pipelines, evaluation systems, and bespoke parameters and methods to maximize model performance for their use case or task.

- Custom-Trained Model

In some cases, organizations need to train a purpose-built model from scratch that understands their business, industry, or domain. Fully custom-trained models imbue new knowledge from a specific domain by modifying key steps of the model training process using novel mid-training and post-training techniques. Organizations that see success with a fully custom-trained model often have large quantities of proprietary data—millions of examples or billions of tokens—that they want to use to teach the model new knowledge or complex, unique behaviors for highly specific use cases.

https://txt.cohere.com/command-r-plus-microsoft-azure/

Command R+ is a state-of-the-art RAG-optimized model designed to tackle enterprise-grade workloads, and is available first on Microsoft Azure

Command R+, like our recently launched Command R model, features a 128k-token context window and is designed to offer best-in-class:

- Advanced Retrieval Augmented Generation (RAG) with citation to reduce hallucinations

- Multilingual coverage in 10 key languages to support global business operations

- Tool Use to automate sophisticated business processes

https://github.com/ngruver/llmtime

By encoding time series as a string of numerical digits, we can frame time series forecasting as next-token prediction in text. Developing this approach, we find that large language models (LLMs) such as GPT-3 and LLaMA-2 can surprisingly zero-shot extrapolate time series at a level comparable to or exceeding the performance of purpose-built time series models trained on the downstream tasks. To facilitate this performance, we propose procedures for effectively tokenizing time series data and converting discrete distributions over tokens into highly flexible densities over continuous values. We argue the success of LLMs for time series stems from their ability to naturally represent multimodal distributions, in conjunction with biases for simplicity, and repetition, which align with the salient features in many time series, such as repeated seasonal trends. We also show how LLMs can naturally handle missing data without imputation through non-numerical text, accommodate textual side information, and answer questions to help explain predictions. While we find that increasing model size generally improves performance on time series, we show GPT-4 can perform worse than GPT-3 because of how it tokenizes numbers, and poor uncertainty calibration, which is likely the result of alignment interventions such as RLHF.
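As a rough illustration of what "encoding time series as a string of numerical digits" could look like, here is a small sketch. It assumes fixed decimal precision, digits separated by spaces, and time steps separated by commas. The `encode_series` and `decode_series` names are hypothetical and this is not the llmtime repository's API, which also handles scaling and model-specific tokenization.

```python
def encode_series(values, precision=2):
    """Turn numbers into a digit string, e.g. 1.23 -> "1 2 3" at precision 2."""
    tokens = []
    for value in values:
        scaled = round(abs(value) * 10 ** precision)  # drop the decimal point
        digits = " ".join(str(scaled))                # one token per digit
        tokens.append(("- " if value < 0 else "") + digits)
    return " , ".join(tokens)


def decode_series(text, precision=2):
    """Invert encode_series: parse comma-separated digit groups back to floats."""
    values = []
    for chunk in text.split(","):
        compact = chunk.replace(" ", "")
        if compact:
            values.append(int(compact) / 10 ** precision)
    return values


encoded = encode_series([0.123, 1.23, 12.3])
print(encoded)  # 1 2 , 1 2 3 , 1 2 3 0
print(decode_series(encoded))
```

The encoded string would be sent to the model as a prompt, and the digits it generates after the last comma would be decoded the same way to obtain the forecast.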

https://github.com/intel-analytics/ipex-llm

IPEX-LLM is a PyTorch library for running LLM on Intel CPU and GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max) with very low latency

https://arxiv.org/abs/2403.20329

Reference resolution is an important problem, one that is essential to understand and successfully handle context of different kinds. This context includes both previous turns and context that pertains to non-conversational entities, such as entities on the user's screen or those running in the background. While LLMs have been shown to be extremely powerful for a variety of tasks, their use in reference resolution, particularly for non-conversational entities, remains underutilized. This paper demonstrates how LLMs can be used to create an extremely effective system to resolve references of various types, by showing how reference resolution can be converted into a language modeling problem, despite involving forms of entities like those on screen that are not traditionally conducive to being reduced to a text-only modality. We demonstrate large improvements over an existing system with similar functionality across different types of references, with our smallest model obtaining absolute gains of over 5% for on-screen references. We also benchmark against GPT-3.5 and GPT-4, with our smallest model achieving performance comparable to that of GPT-4, and our larger models substantially outperforming it.

https://stability.ai/news/stable-audio-2-0

Stable Audio 2.0 sets a new standard in AI-generated audio, producing high-quality, full tracks with coherent musical structure up to three minutes in length at 44.1kHz stereo.

The new model introduces audio-to-audio generation by allowing users to upload and transform samples using natural language prompts.

Stable Audio 2.0 was exclusively trained on a licensed dataset from the AudioSparx music library, honoring opt-out requests and ensuring fair compensation for creators.

Deadline reports that The Martian writer Drew Goddard has been tapped to pen and direct another Matrix movie executive produced by Lana Wachowski. Currently, the new film has no title or projected premiere date, and there’s been no announcement as to whether franchise stars like Keanu Reeves, Carrie-Anne Moss, Laurence Fishburne, Yahya Abdul-Mateen II, or Jessica Henwick will return.

Not sure how to feel about this, but I'll end up watching anyway.

Good article. I felt this way when Google Reader and a few other services were shut down.

That being said, this is kind of a good thing.

Luckily, there are plenty of good podcast apps out there, like Pocket Casts, Overcast, Antennapod, and even Apple Podcasts.

This line basically says it all. Podcasts, like blogging, remain an open ecosystem where the saying "wherever you get your podcasts" is still going strong.

https://openai.com/blog/start-using-chatgpt-instantly

We’re making it easier for people to experience the benefits of AI without needing to sign up.

We may use what you provide to ChatGPT to improve our models for everyone. If you’d like, you can turn this off through your Settings - whether you create an account or not.

We’ve also introduced additional content safeguards for this experience, such as blocking prompts and generations in a wider range of categories.

https://www.theverge.com/24115039/danger-hiptop-t-mobile-sidekick-jump-button

Bring back the Sidekick! Ayaneo Slide is probably the closest to this today. Would love to see a smaller version of it running on Windows on ARM-based Snapdragon processors.

Before the iPhone, before Android, before webOS, a revolutionary soap bar of a phone made it incredibly easy to get shit done. The Danger Hiptop, better known as the T-Mobile Sidekick, made the internet portable and affordable like no phone before.

https://www.databricks.com/blog/announcing-dbrx-new-standard-efficient-open-source-customizable-llms

Today, we are excited to advance our mission by open sourcing DBRX, a general purpose large language model (LLM) built by our Mosaic Research team that outperforms all established open source models on standard benchmarks. We believe that pushing the boundary of open source models enables generative AI for all enterprises that is customizable and transparent.

We are excited about DBRX for three distinct reasons. First, it handily beats open source models, such as, LLaMA2-70B, Mixtral, and Grok-1 on language understanding, programming, math, and logic...

Second, DBRX beats GPT-3.5 on most benchmarks...

Third, DBRX is a Mixture-of-Experts (MoE) model built on the MegaBlocks research and open source project, making the model extremely fast in terms of tokens/second.

https://tianweiy.github.io/dmd/

Our one-step generator achieves comparable image quality with StableDiffusion v1.5 while being 30x faster.

Diffusion models are known to approximate the score function of the distribution they are trained on. In other words, an unrealistic synthetic image can be directed toward higher probability density region through the denoising process (see SDS). Our core idea is training two diffusion models to estimate not only the score function of the target real distribution, but also that of the fake distribution. We construct a gradient update to our generator as the difference between the two scores, essentially nudging the generated images toward higher realism as well as lower fakeness (see VSD). Our method is similar to GANs in that a critic is jointly trained with the generator to minimize a divergence between the real and fake distributions, but differs in that our training does not play an adversarial game that may cause training instability, and our critic can fully leverage the weights of a pretrained diffusion model. Combined with a simple regression loss to match the output of the multi-step diffusion model, our method outperforms all published few-step diffusion approaches, reaching 2.62 FID on ImageNet 64x64 and 11.49 FID on zero-shot COCO-30k, comparable to Stable Diffusion but orders of magnitude faster. Utilizing FP16 inference, our model generates images at 20 FPS on modern hardware.
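A toy sketch of the "difference between the two scores" update described above, under heavy assumptions: both distributions are 1-D unit-variance Gaussians, so their scores are known in closed form instead of being estimated by diffusion models, and the generator is just a shift `theta + z`. All names here are hypothetical and not taken from the project.

```python
import random


def gaussian_score(x, mean):
    """Score (gradient of log density) of a unit-variance Gaussian at x."""
    return -(x - mean)


def train_generator(mu_real, theta=0.0, lr=0.1, steps=200, seed=0):
    """Move a one-parameter generator G(z) = theta + z toward N(mu_real, 1).

    The update uses (fake score - real score) at a generated sample, which is
    the gradient of KL(fake || real) for this toy setup since dG/dtheta = 1.
    """
    rng = random.Random(seed)
    for _ in range(steps):
        x = theta + rng.gauss(0.0, 1.0)          # sample from the generator
        s_fake = gaussian_score(x, theta)        # score of the current fake distribution
        s_real = gaussian_score(x, mu_real)      # score of the target real distribution
        theta -= lr * (s_fake - s_real)          # toward realism, away from fakeness
    return theta


print(train_generator(mu_real=3.0))
```

In the method itself the fake score has to be learned and kept up to date as the generator changes; the closed-form Gaussian scores here only stand in for that.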

https://www.404media.co/404-media-now-has-a-full-text-rss-feed/

We paid for the development of full text RSS feeds for Ghost-based publishers. Now we can offer them to our paid subscribers, and other Ghost sites can use the service too.

Our friends Anil Dash and Ernie Smith have recently written passionately and persuasively about the importance of RSS to the open web, and about how a technology that turns 25 years old this month remains both subversive and quite versatile. RSS-based distribution underpins a podcasting ecosystem that has allowed for shows to be distributed not just on Apple Podcasts but on Spotify, Google Podcasts, Pocket Casts, Overcast, and whatever other podcast player you might want to listen on. “Being able to say, ‘wherever you get your podcasts’ is a radical statement,” Dash wrote. “Because what it represents is the triumph of exactly the kind of technology that's supposed to be impossible: open, empowering tech that's not owned by any one company, that can't be controlled by any one company, and that allows people to have ownership over their work and their relationship with their audience.”

RSS has empowered podcasters, but it needs a “creator economy rethink” for text.

https://stability.ai/news/stabilityai-announcement

Earlier today, Emad Mostaque resigned from his role as CEO of Stability AI and from his position on the Board of Directors of the company to pursue decentralized AI.

https://arxiv.org/abs/2312.00752

Foundation models, now powering most of the exciting applications in deep learning, are almost universally based on the Transformer architecture and its core attention module. Many subquadratic-time architectures such as linear attention, gated convolution and recurrent models, and structured state space models (SSMs) have been developed to address Transformers' computational inefficiency on long sequences, but they have not performed as well as attention on important modalities such as language. We identify that a key weakness of such models is their inability to perform content-based reasoning, and make several improvements. First, simply letting the SSM parameters be functions of the input addresses their weakness with discrete modalities, allowing the model to selectively propagate or forget information along the sequence length dimension depending on the current token. Second, even though this change prevents the use of efficient convolutions, we design a hardware-aware parallel algorithm in recurrent mode. We integrate these selective SSMs into a simplified end-to-end neural network architecture without attention or even MLP blocks (Mamba). Mamba enjoys fast inference (5× higher throughput than Transformers) and linear scaling in sequence length, and its performance improves on real data up to million-length sequences. As a general sequence model backbone, Mamba achieves state-of-the-art performance across several modalities such as language, audio, and genomics. On language modeling, our Mamba-3B model outperforms Transformers of the same size and matches Transformers twice its size, both in pretraining and downstream evaluation.
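A scalar toy version of the "SSM parameters as functions of the input" idea from the abstract, to make the recurrence concrete. This is an assumption-laden sketch, not Mamba: the state is a single number, the weights `w_delta`, `w_b`, `w_c` are made-up scalars standing in for learned projections, and it runs as a plain sequential loop instead of the hardware-aware parallel scan.

```python
import math


def softplus(v):
    """Smooth positive function, used here to keep the step size above zero."""
    return math.log1p(math.exp(v))


def selective_scan(xs, a=-1.0, w_delta=1.0, w_b=1.0, w_c=1.0):
    """Run h_t = a_bar_t * h_{t-1} + b_bar_t * x_t, y_t = c_t * h_t.

    Unlike a fixed linear recurrence, the step size, input gate, and readout
    all depend on the current input x_t, so each token decides how much of
    the state to keep and how much of itself to write.
    """
    h = 0.0
    ys = []
    for x in xs:
        delta = softplus(w_delta * x)      # input-dependent step size
        a_bar = math.exp(delta * a)        # decay in (0, 1) because a < 0
        b_bar = delta * (w_b * x)          # input-dependent write strength
        c = w_c * x                        # input-dependent readout
        h = a_bar * h + b_bar * x
        ys.append(c * h)
    return ys


print(selective_scan([0.5, 2.0, 0.0, 1.0]))
```

Because the loop touches each element once with a fixed-size state, the cost grows linearly with sequence length, which is the scaling property the abstract highlights.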

https://huggingface.co/blog/Pclanglais/common-corpus

We announce today the release of Common Corpus on HuggingFace:

- Common Corpus is the largest public domain dataset released for training LLMs.

- Common Corpus includes 500 billion words from a wide diversity of cultural heritage initiatives.

- Common Corpus is multilingual and the largest corpus to date in English, French, Dutch, Spanish, German and Italian.

- Common Corpus shows it is possible to train fully open LLMs on sources without copyright concerns.

https://huggingface.co/learn/ml-games-course/unit0/introduction

Welcome to the course that will teach you the most fascinating topic in game development: how to use powerful AI tools and models to create unique game experiences.

New AI models are revolutionizing the Game Industry in two impactful ways:

- On how we make games:

  - Generate textures using AI

  - Using AI voice actors for the voices.

- How we create gameplay:

  - Crafting smart Non-Playable Characters (NPCs) using large language models.

This course will teach you:

- How to integrate AI models for innovative gameplay, featuring intelligent NPCs.

- How to use AI tools to help your game development pipeline.

In the same way that every little place in America used to have a printed newspaper, every little place in America could have an online local chronicle.

Broadly speaking, an online local chronicle is a collection of facts organized mostly in chronological order. The “pages” of the chronicle can be thought of as subsets of a community’s universal timeline of events. These online local chronicles could become the backbone of local news operations.

Nice project. Unfortunately, it's rare to get truly local news. I like publications and websites like Hoboken Girl and Block Club Chicago, and I wish there were more of them in more cities and towns. I know they exist in some forms, like Facebook Groups. Even better, it'd be great to have the websites for these publications be the main source of truth that then syndicates their content to the various platforms out there.

https://huggingface.co/blog/quanto-introduction

Quantization is a technique to reduce the computational and memory costs of evaluating Deep Learning Models by representing their weights and activations with low-precision data types like 8-bit integer (int8) instead of the usual 32-bit floating point (float32).

Today, we are excited to introduce quanto, a versatile pytorch quantization toolkit, that provides several unique features:

- available in eager mode (works with non-traceable models)

- quantized models can be placed on any device (including CUDA and MPS),

- automatically inserts quantization and dequantization stubs,

- automatically inserts quantized functional operations,

- automatically inserts quantized modules (see below the list of supported modules),

- provides a seamless workflow for a float model, going from a dynamic to a static quantized model,

- supports quantized model serialization as a state_dict,

- supports not only int8 weights, but also int2 and int4,

- supports not only int8 activations, but also float8.
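To make the int8 idea from the quanto excerpt concrete, here is a minimal pure-Python sketch of symmetric per-tensor quantization. It does not use quanto or its API; the function names and the single shared scale are assumptions chosen for illustration.

```python
def quantize_int8(values):
    """Map floats to int8 codes using one shared scale (symmetric scheme)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the int8 range.
    codes = [max(-128, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from int8 codes."""
    return [c * scale for c in codes]


weights = [-1.0, 0.0, 0.25, 1.0]
codes, scale = quantize_int8(weights)
print(codes, scale)
print(dequantize(codes, scale))
```

Each weight now takes one byte instead of four, at the cost of a rounding error of at most half a scale step per value.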

https://arxiv.org/abs/2310.04475

Embeddings have become a pivotal means to represent complex, multi-faceted information about entities, concepts, and relationships in a condensed and useful format. Nevertheless, they often preclude direct interpretation. While downstream tasks make use of these compressed representations, meaningful interpretation usually requires visualization using dimensionality reduction or specialized machine learning interpretability methods. This paper addresses the challenge of making such embeddings more interpretable and broadly useful, by employing Large Language Models (LLMs) to directly interact with embeddings -- transforming abstract vectors into understandable narratives. By injecting embeddings into LLMs, we enable querying and exploration of complex embedding data. We demonstrate our approach on a variety of diverse tasks, including: enhancing concept activation vectors (CAVs), communicating novel embedded entities, and decoding user preferences in recommender systems. Our work couples the immense information potential of embeddings with the interpretative power of LLMs.

https://huggingface.co/spaces/Xenova/the-tokenizer-playground

Experiment with different tokenizers (running locally in your browser). I really love playing around with this.

https://stability.ai/news/introducing-stable-video-3d

Today we are releasing Stable Video 3D (SV3D), a generative model based on Stable Video Diffusion, advancing the field of 3D technology and delivering greatly improved quality and view-consistency.

This release features two variants: SV3D_u and SV3D_p. SV3D_u generates orbital videos based on single image inputs without camera conditioning. SV3D_p extends the capability by accommodating both single images and orbital views, allowing for the creation of 3D video along specified camera paths.

Stable Video 3D can be used now for commercial purposes with a Stability AI Membership. For non-commercial use, you can download the model weights on Hugging Face and view our research paper here.

https://www.theverge.com/2024/3/18/24105157/nvidia-blackwell-gpu-b200-ai

Nvidia reveals Blackwell B200 GPU, the ‘world’s most powerful chip’ for AI

‘Built to democratize trillion-parameter AI.’

Nvidia says the new B200 GPU offers up to 20 petaflops of FP4 horsepower from its 208 billion transistors. Also, it says, a GB200 that combines two of those GPUs with a single Grace CPU can offer 30 times the performance for LLM inference workloads while also potentially being substantially more efficient. It “reduces cost and energy consumption by up to 25x” over an H100, says Nvidia.

https://arxiv.org/abs/2403.09611